For the first time, a team of neuroscience researchers have demonstrated the ability to fly and manoeuvre a remote-controlled helicopter using only their thoughts.

Well ... thoughts and a sophisticated "brain-computer interface" that detects those thoughts, interprets them as control signals, and directs the helicopter accordingly.

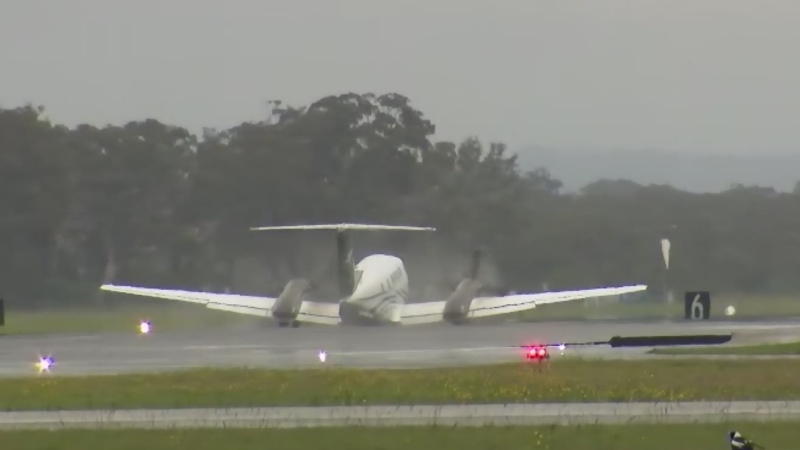

A YouTube video of the experiment shows the four-rotor "quadcopter" as it is navigated up, forward, left and right -- and even through hoops suspended from a gymnasium ceiling -- by a pilot wearing a cap fitted with 64 electrodes.

"Imagine making a fist with your right hand, it turns the robot to the right," says Karl LaFleur, a graduate student who worked on the project.

"And if you imagine making a fist with both hands, it moves the robot up," adds Alex Doud, another researcher in the video.

The project took place under the leadership of Prof. Bin He, at the University of Minnesota College of Science and Engineering.

In the YouTube video, a narrator explains the process, which requires no physical movement whatsoever.

"When the controller imagines a movement, without actually moving, specific neurons in the brain's motor cortex produce electric currents. These currents are detected by electrodes in an EEG, which sends signals to the computer. The computer translates the signal pattern into a command and beams it to the robot via Wi-Fi," the narrator explains.

The process is non-invasive, requiring no implants or chips to detect the brain signals and translate them into commands.

And it has significant potential for the treatment of people with disabilities -- both mental and physical -- Prof. He said.

"This brain-computer interface technology is all about helping people with a disability or various neurodegenerative diseases, to help them regain mobility, independence and enhance performance," He said in the video. "We envision they could use this technology to help control wheelchairs, artificial limbs or other devices."

The video accompanied a study published Tuesday in the Journal of Neural Engineering.

Five people, aged 21-28, were included in the study. Each was first trained to "modulate their sensorimotor rhythms" to control and navigate the drone in a 3-D environment.

The chopper was equipped with a camera mounted on its nose, and the subjects -- or pilots -- faced a screen that displayed the feed from the camera.

After several training sessions, the subjects were scored as they flew the helicopter through two foam rings. Their score was based on the number of times they successfully navigated through the rings, the number of times they collided with the rings, and the number of times they flew outside the boundaries of the course.

The five subjects were able to pilot the craft with an accuracy rate ranging from 69.1 per cent to 90.5 per cent.