In the era of disinformation, social media users are forced to turn an increasingly skeptical eye on their feeds in order to separate fact from fiction.

But the latest trend in online manipulation tests the fundamental belief of “seeing is believing.”

Deepfakes -- videos that have been manipulated using artificial intelligence (AI) -- could become the next big threat to truth, according to experts.

The technology is used to generate falsified videos by either swapping one face for another, or by allowing someone's motions and voice to be mapped onto another person. In other words, it allows people to fabricate videos of people – or, more problematically, politicians – saying things they never said, or doing things they never did.

Experts say these videos could pose a very real risk when placed in the hands of those who aim to sway public opinion -- especially in the midst of a federal election.

“The architects of these sorts of online manipulated media often play upon the fears and biases of a certain subset of the electorate that they are trying to impact,” John Villasenor, professor of engineering at UCLA and senior fellow at U.S.-based think tank Brookings Institution, told CTVNews.ca by phone from Los Angeles Monday.

“If you happen to believe that a particular candidate is terrible, then you will be more willing to give credence to a video that confirms that bias.”

Deepfakes have already made their way into the political sphere in the U.S. The most notable example is a video of House of Representatives Speaker Nancy Pelosi criticizing President Donald Trump that was altered to make it look like she was slurring her words.

Though Villasenor referred to this video as more of a “cheapfake” than a “deepfake” because it was only altered to affect Pelosi’s speech, it was still viewed more than two million times, sparking outrage and suggestions Pelosi was drunk.

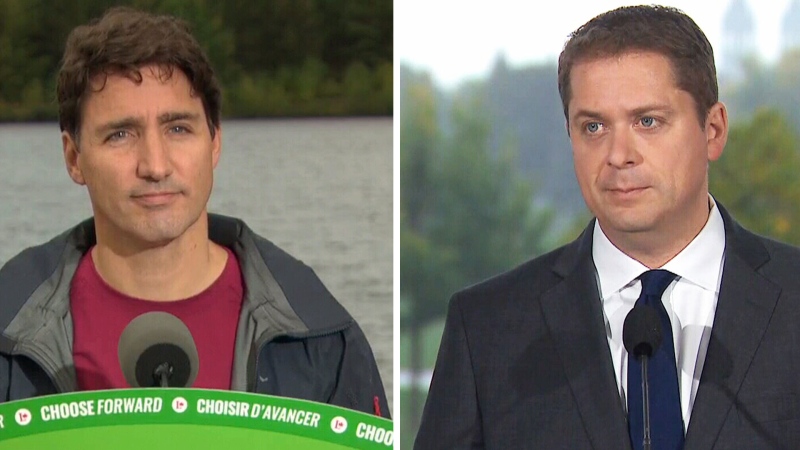

Canadian politicians have also been targeted by deepfakes.

In June, a YouTube user under the name “Fancyscientician” posted multiple deepfakes featuring Conservative Leader Andrew Scheer and Ontario Premier Doug Ford. One of the videos put Scheer’s face over that of Pee-wee Herman in a 1980s public service announcement about drug use.

Though the videos err on the side of humour rather than deception, the Communications Security Establishment (CSE), Canada’s cybersecurity agency, warns that deepfakes are a threat to political parties and candidates.

The agency included deepfakes in the 2019 update to its Cyber Threats to Canada’s Democratic Process report, noting, “Foreign adversaries can use this new technology to try to discredit candidates, and influence voters by, for example, creating forged footage of a candidate delivering a controversial speech or showing the candidate in embarrassing situations.”

While Villasenor says the public needs to be more discerning about the content it views on social media, he warns that an increase in fake videos could lead more people to call authentic videos into question.

“We’re moving into an era where we all need to keep in mind that just because we see something on video doesn’t mean it actually happened in how it appears in the video,” said Villasenor.

“[But] another side effect of this is that things that actually happened can be called into question by someone that might want to deny the reality of the event. It not only offers opportunity for people who might want to fabricate things; it undermines our understanding of truth from both directions.”

While the risk of deepfakes popping up during the federal election campaign is real, not all experts agree the technology could swing Canada’s election results.

“Deepfake technology has the ability to create chaos, especially if it was targeted at a high profile politician in a tight race. However, the technology is still not quite sophisticated enough to justify the cost,” Claire Wardle, executive chair at First Draft, an organization working to address the challenges of disinformation, told CTVNews.ca by email Tuesday.

“There are many cheaper (read: free) ways to cause harm.”

Wardle noted that manipulated audio clips also present a significant risk moving forward.

“We're much more trusting of our ears and it's easier to fool people than video, where the quality has to be incredibly high,” Wardle said.
