With approximately 10 Canadians dying by suicide every day, the federal government is investing in a new pilot project that could one day use artificial intelligence to predict spikes in suicide risk across the country.

Advanced Symbolics Inc., an artificial intelligence service company, is set to begin a pilot project that will scan random samples of social media for red flags indicating that a particular area of the country could be headed for a spate of suicides.

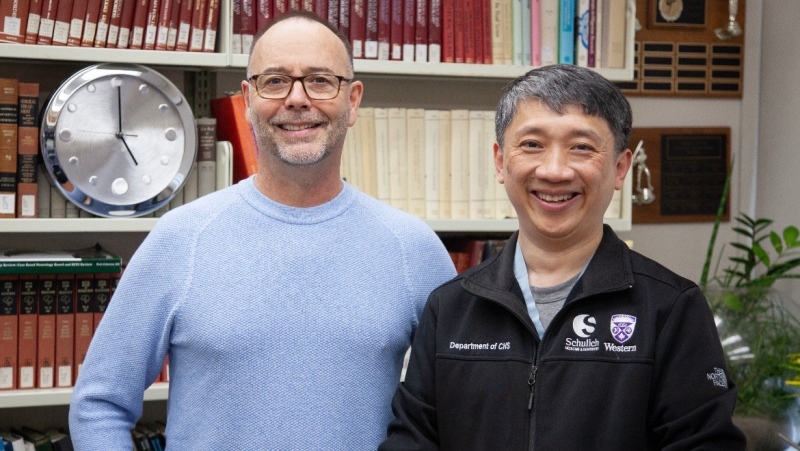

The project, expected to start in early February, will begin by examining previous suicide hot spots to look for patterns, explained Kenton White, chief scientist at Advanced Symbolics.

“We’re taking past data from areas that have had high incidents of suicide and we’re looking to see if we can detect behaviours or patterns that led up to those tragedies,” White told CTV’s Your Morning from Ottawa Thursday.

“If we can find those patterns, then perhaps in the future, we can forecast where these hotspots might be and provide resources and other help to those regions before the tragedies strike.”

White stressed that the system is not designed to identify individuals at risk of suicide; instead, it focuses on regions where suicides are most likely, drawing on randomly chosen samples of social media.

For example, three teenagers in Cape Breton Island died by suicide last year. To try to prevent similar tragedies, the AI program would look for patterns that might have predicted those deaths.

White said it is not yet clear what red flags the system will look for; identifying them is exactly what the pilot phase is meant to do.

“We’re not telling the computer, ‘This is what you look for.’ Instead, our AI program – her name is Polly – is going to be reading people’s posts from social media and other online sources leading up to these events and figuring out what the patterns are on her own,” he said.

The three-month pilot will focus on determining whether such patterns can be identified at all. If it succeeds, the federal government will then decide whether to proceed with regional suicide surveillance.

White said privacy is a paramount concern, which is why his company uses tools similar to those the federal government applies to census data.

“We also have additional safeguards in place to make it very difficult or even impossible for our own staff to look in and see who is having issues and finding out who that person is,” he said.

White conceded that even the best suicide prediction tool in the world will not be enough to prevent deaths if there aren’t enough resources to help communities struggling with depression, substance abuse, and other problems that can lead to suicide.

“This isn’t going to replace all the great people we already have on the ground working on this really important problem. What we’re trying to do is to provide early warning to get more resources into places where they’re needed,” he said.

That could mean staffing more suicide helplines, moving mental health workers and social workers into a community, or even running more public service announcements about how those with depression can seek help.

“So it’s to help us be more efficient with the resources we do have,” White said.