A massive study on driverless cars asked participants which types of people or animals should be struck over others during unavoidable collisions.

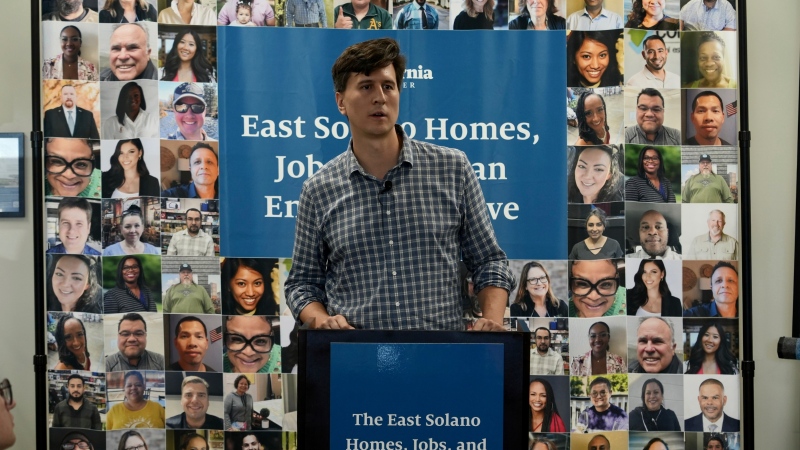

Researchers, led by MIT postdoc Edmond Awad, asked participants to answer a broad series of questions as if they were behind the wheel.

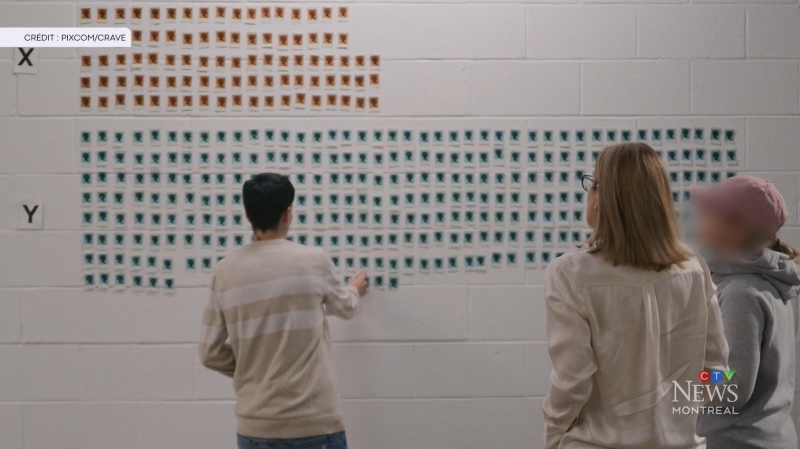

Participants were randomly given 13 binary choices on two visual slides. Each posed different combinations of people or animals that would be either struck or saved. Participants’ responses and cultural backgrounds were then collected for the study.

One of the questions, which can be found online, asked whether participants would rather hit several elderly people crossing the street illegally or a homeless man crossing legally. Another asked whether a group of male doctors should be chosen over female ones.

These types of split-second decisions, and how they could affect programmers of driverless or autonomous vehicles (AVs), were analyzed in a new paper entitled “The Moral Machine Experiment.” The study was published in Nature on Wednesday.

Azim Shariff, a study co-author, told CTVNews.ca that the study’s “cartoonish” binary choices could be programmed into future AVs as small maneuvers the vehicles could take to redistribute risk, with the aim of avoiding collisions and hitting people entirely.

“Treating people equally has a moral appeal and technological appeal — because it’s the simplest thing to do,” he said. But if cars were to treat people differently, AV sensors would need to be calibrated to sense different ages or other criteria, which he says would open another can of worms.

In the study, researchers asked respondents to face variations on the classic trolley problem, which asks: “Would you kill one person to save five others?” The goal was to better understand how people think autonomous cars should react to real-world situations if and when they are on the road.

Shariff noted that Canada ranked first among all 233 countries as the most utilitarian. In other words, Canadians had the strongest overall preference for saving more people over fewer.

“(Canada) prioritized that dimension the most,” he said, adding the United Kingdom was a close second. He noted this was interesting given that utilitarian thought originated in Britain.

The researchers gathered responses from over two million participants. The team found that young people were generally chosen over older ones, that participants would rather save more people than fewer, that humans were chosen over animals and that pedestrians were spared over passengers.

Jaywalkers, young treated differently based on country

But there were definitely differences across cultural lines.

For example, people in Asian and Middle Eastern countries would rather have young people take the hit to save an elderly life. That was not the case for participants in Latin and Western countries, who opted to save younger pedestrians instead.

If you’re a jaywalker in most countries, you’re not going to be chosen over lawful people crossing the street — unless you’re in a less affluent country, where people are far more forgiving towards jaywalkers.

For a full breakdown, the researchers have a website dedicated to examining how respondents in each surveyed country spared jaywalkers, the elderly or people of higher status.

When looking at overall preferences, Canada was most closely related to the U.K. and most dissimilar to Brunei.

Shariff said the “cultural continuities” between Canada and the U.K. made sense given how much influence Britain has had on the development of modern-day Canada.

When it came to sparing the rich, Canadian respondents ranked 46th out of the 233 countries, which was fairly similar to other Western countries such as the United States.

Shariff said that, in the future, AVs will face different degrees of pushback or criticism depending on where people live and who programmed the vehicles.

“Imagine you design a car in California by a bunch of Westerners … and then you tried to sell that in India, Nigeria or New York City where people’s attitudes towards jaywalkers are different,” he said, hypothesizing consumers would think: “I can’t buy a car programmed that way.”

While people facing similar scenarios in real-life collisions rely heavily on gut decisions, one of the key goals of this research is to better teach autonomous cars how to respond.

But then the question becomes: how much power should we be giving our machines over human lives?

How inventors and programmers answer that could drive people to fear where they would stand in a hierarchy of computer choices. This is especially true when driverless cars could literally decide whether you live or die based on how their computations ranked you compared to someone else.

In July, a German ethics commission made up of religious and legal experts examined dilemmas for AVs in greater detail and issued a series of guidelines. The guidelines largely matched the study’s findings, such as prioritizing human lives over animal ones.

But unlike the outcomes that participants chose in the survey, the commission suggested AVs shouldn’t take into account age, gender or mental ability.

“There seems to be this gulf between what elite professional ethicist class was saying and what (participants) were saying,” Shariff said, adding that the gap would have to be bridged for AVs to be more widely accepted.

The researcher, who didn’t participate in the commission, added that the German advisors said AVs should also never swerve in a way that draws in people who were not already involved in a potential collision.

“But that was something our participants didn’t care about. They were fine with them swerving away,” he said. Shariff added, however, that lawyers he spoke to said that, under current laws, not swerving leaves AV manufacturers with the least legal liability.

If you wish to take the test for yourself, it is available online.