In many ways, video-sharing app TikTok is a home like any other for content that entertains, informs and inspires. It's a place where a user might discover their favourite new pasta recipe, fitness routine, author, artist or hobby.

But there's also a darker side – a phenomenon in which some TikTok users seem to routinely injure and sicken themselves in a bid to make viral content for the app.

For example, TikTok is the birthplace of the "blackout rage gallon," or "borg," a gallon-sized mixed drink of liquor, water and flavoured electrolyte drink enhancers designed to get partygoers extremely intoxicated. In all, videos tagged with "borg" have amassed more than 544 million views on TikTok.

Another trend that surfaced sometime around 2020 has landed some kids in the hospital with injuries ranging from broken bones to concussions, and led to legal action and criminal charges for others.

The "Skull Breaker Challenge" is a prank popularized on TikTok in which a victim is told to jump as high as they can for a video, only to have their feet kicked out from under them once they're off the ground, causing them to fall down violently.

TikTok has since removed hashtags associated with the challenge, but videos of people participating in the challenge are easy to find online.

In 2022, police departments around the U.S. reported increases in Kia and Hyundai thefts after a TikTok video showing how to hotwire the cars with a USB cord and a screwdriver went viral. In October that year, police in New York said a car crash involving a stolen Kia that left four teenagers dead may have been linked to the "Kia challenge" trend.

All of this raises the question: Why does TikTok appear to lead so many users toward harmful behaviour?

To understand the answer, experts say it's important to understand a little about how the app makes money and how social media algorithms interact with human psychology.

Social media platforms like Facebook, Instagram, Twitter and TikTok generate revenue by gathering data about their audiences for use in targeted advertising on those apps, explained Brett Caraway, who heads the Digital Enterprise Management program at the University of Toronto's Institute of Communication, Culture, Information and Technology.

"By and large, the vast sums of revenue that these apps accrue are coming from advertising," Caraway told CTVNews.ca in a phone interview. "And there's a big difference between the advertising of today and the advertising of, say, 20th-century broadcast media."

More user engagement translates to more revenue, and within each app, algorithms promote content that drives the type of engagement most valuable to the parent company's bottom line. On Facebook, Caraway explained, posts that generate "likes," comments and re-posts are valuable, as are the niche social networks – groups, pages and events – that drive interaction with these posts.

"(TikTok) is not so much interested in social networks," Caraway said. "What it's really trying to do is figure out how to keep users engaged as long as possible and engaging in the scrolling, because that's how they're able to sell their property, their platform, to advertisers."

While the inner workings of TikTok's algorithms are a closely held trade secret, almost anyone who has spent time on the app knows how quickly a user can be sucked into hours of scrolling through entertaining, easily digestible, low-commitment content. So how does TikTok's business model make the platform such a fitting home for risky, attention-seeking trends?

"It's kind of the interplay of two things," Caraway said. First, content that is cheap to produce, entertaining and easily digested tends to be of lower quality. What such content lacks in quality, it may make up for in shock value.

"The content that gets the most engagement is not the thoughtful, long essay. What gets shared is 'man bites dog,'" Caraway said. "It's the scandalous. It's the salacious."

Second, by feeding users more of the content they've already shown interest in, the app creates a feedback loop with its audience. That audience has grown to more than one billion people, and most of them are teenage and young-adult users, many of whom Caraway described as "highly engaged content producers."

For the users who generate this content, the rewards can include validation, popularity, fame, gifts and even money.

"So the content is young people dancing, it's meme-inspired, self-deprecating humour, it is young people with grievances or with mental health issues that they're trying to connect with other people," he said. "There are also people…that are just prone to make bad decisions when they're young."

TIKTOK’S RESPONSE

TikTok maintains it has robust measures in place to screen and remove content that violates its community guidelines or depicts or promotes dangerous behaviour. In a statement emailed to CTVNews.ca on Thursday, a spokesperson said the company has created technology that can alert its safety teams to "sudden increases in violating content" linked to hashtags to help detect potentially harmful trends.

"As our Community Guidelines clearly state, content that promotes dangerous behaviour has no place on TikTok and we will remove any content that violates those guidelines," the spokesperson wrote. "We strongly discourage anyone from engaging in behaviour that may be harmful to themselves or others."

According to the spokesperson, TikTok does not show videos of known dangerous challenges in search results, and provides resources for parents, guardians and educators about how to guide teens in assessing the safety of online challenges.

Whether or not TikTok's algorithm rewards risky, shocking, salacious or harmful behaviour – in other words, content that keeps users glued to the app for hours – Sara Grimes says it wouldn't be the first to do so.

Grimes is director of the University of Toronto's Knowledge Media Design Institute. Before TikTok popularized the "Kia challenge" or "blackout rage gallon," Facebook, YouTube and meme sites popularized the "Tide Pod challenge," she pointed out. And before YouTube, people pulled stunts and pranks in front of their friends and peers.

"There have been countless of these fads, and they kind of pick up on much older traditions," Grimes told CTVNews.ca in a phone interview. "But they were localized before because there was no way to spread that to a billion people. Now, there is."

But is TikTok truly a neutral platform that reflects only the content users want to see? Conspiracy theories circulating online claim otherwise.

THE 'CHINESE WEAPON' THEORY

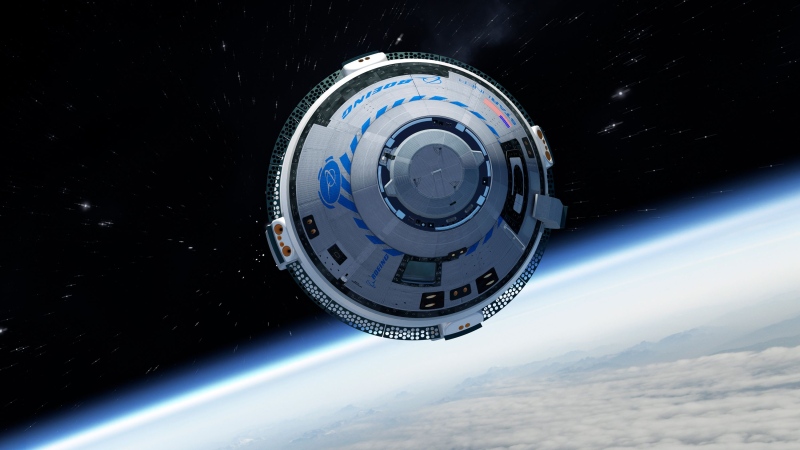

TikTok's parent company, ByteDance Ltd., is based in Beijing, China.

As Canada and the U.S. continue to trade barbs with the Chinese government over a long list of issues including alleged spy balloons and election interference, a theory has emerged that the video-sharing app might be a weapon designed by the Chinese government to weaken Western society.

On Feb. 28, the Government of Canada joined the United States and the European Union in banning the app on federal government-issued devices over privacy and security concerns. Most provincial governments then followed suit.

A few days earlier, tabloid newspaper The New York Post published an editorial with the headline "China is hurting our kids with TikTok but protecting its own youth with Douyin."

The article repeated a theory shared in online forums and blogs that the Chinese government – conspiring with ByteDance – is using TikTok to corrupt youth in rival Western countries, diverting their attention away from more important things and encouraging them to harm themselves and others. Meanwhile, the theory continues, TikTok's Chinese counterpart Douyin is intentionally programmed to nurture, encourage and educate youth in China.

Neither Grimes nor Caraway has seen or heard of any evidence TikTok is designed to harm users in Western countries.

In fact, Caraway believes the theory is a product of anti-Chinese rhetoric surrounding the latest tensions between Western allies and China.

"I do think that a lot of the focus on TikTok is because of an escalation in tension between the United States and China," he said. "And Canada is part of this, too."

Months before the first Chinese-operated high-altitude balloon was spotted in North American airspace this year, the Canadian and Chinese governments were at loggerheads over Canada's "serious concerns around interference activities in Canada."

While Canada and the U.S. strongly suspect the Chinese government of having tried to gain access to state and trade secrets, conducted online misinformation campaigns and used social media to interfere in Canadian and U.S. elections, Caraway said it's less likely that the state is actively campaigning to corrupt Western youth through TikTok.

If the app's algorithms are unintentionally harming users and its parent company appears slow to react, Grimes said that's more likely the result of a business decision than a state conspiracy. For example, TikTok apologized in 2021 to Black content creators who felt "unsafe, unsupported or suppressed" only after what Grimes described as "years of complaints" by Black users that they were being censored on the app.

"(TikTok) claimed they don't know how it happened, and that they would do better," she said. "So we know that they have some problems with their algorithm already, because we have this example of them publicly apologizing for…an anti-Black component of how their feed was designed."

Similarly, Facebook apologized in 2021 for a flaw in its Instagram app that meant it promoted weight-loss content to users with eating disorders. Grimes pointed out that the apology came only after whistleblower Frances Haugen shared internal documents that she said showed Facebook knew its algorithms were harming teens with eating disorders, but chose to prioritize growth and engagement over their wellbeing.

"So it's not just TikTok that we need to be worried about," Grimes said.

"It's the whole ecosystem for social media technologies. These social media platforms, they've been allowed to just do what they want with the data."