TORONTO -- Data experts are cautioning already on-edge Canadians against taking Ontario's dire predictions about COVID-19 deaths literally, even as the revelation of stark data coincided with more physical distancing measures and impassioned pleas by government and health officials to stay home.

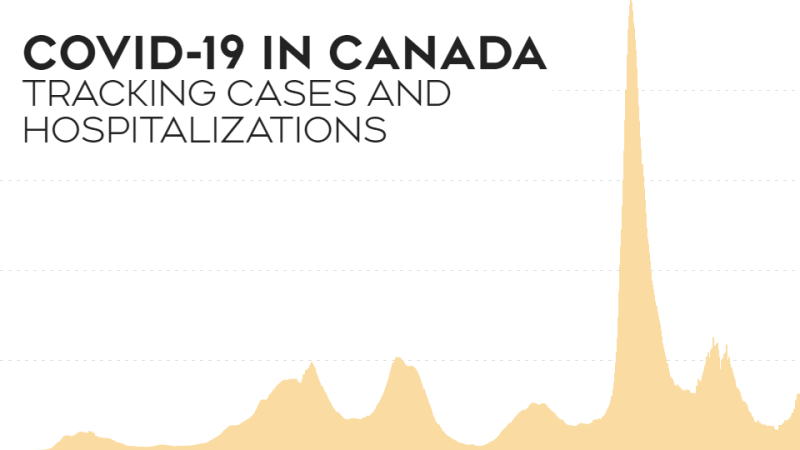

In presenting the data Friday, the president and CEO of Public Health Ontario said staying home could be the difference between 6,000 deaths by April 30 and 1,600 deaths. Deaths could drop to 200 if further measures are brought in, said Dr. Peter Donnelly.

Officials also offered a glimpse at what might happen over the length of the outbreak, which could stretch from 18 months to two years, but cautioned those scenarios become less certain the further into the future they are set.

If Ontario had not enacted various interventions including school closures, up to 100,000 people would die from COVID-19, said Donnelly. But with various public health measures, deaths could number between 3,000 and 15,000, he said.

Pandemic experts say such projections are not really meant to predict the future, but rather to provide a general guide for policy-makers and health-care systems grappling with a growing pandemic.

Ideally, the information should also assure average citizens that their individual actions can make a difference, said University of Toronto epidemiology professor Ashleigh Tuite.

"That's really important feedback to share not just within government, but with the population at large because everybody has a really huge investment in this," says Tuite, who has created her own projections for the spread of COVID-19.

"The answer may be it's going to take longer than we thought. And although that's not the desired answer, it's a possible answer. Communicating that (is) going to be really critical, especially if we're looking at longer time horizons."

Provincial health officials have urged the public to "bear down hard" on isolation measures meant to break the chain of COVID-19 transmission, pointing to best- and worst-case scenarios they say largely depend on compliance.

The data was quickly followed by news of more closures, making it clear why Ontario was suddenly pushing out the warning, says Tuite.

"We're in a situation where we need buy-in from everybody. And so I think treating people like adults and having these conversations and explaining what we know and what we don't know -- and where we're learning and where we potentially failed -- I don't think that that's a bad thing. As a society, we need to have that dialogue."

Various assumptions were used to make Ontario's model, and Donnelly cautioned that "modelling and projecting is a very inexact science."

"In the early days of an epidemic it's all about providing an important early steer to policy-makers, about what they should be doing. And that's what happened in Ontario," he said.

"Because as soon as command table saw the figures that suggested that there could be an overall mortality of between 90 and 100,000, they moved very quickly to shut the schools, which was the right thing to do."

Models may be imperfect, but policy-makers would basically be operating blind without them, says Dionne Aleman, an industrial engineering professor at the University of Toronto.

She notes these educated guesses can help answer big questions dogging many hospitals: When will the surge of COVID-19 patients arrive? Do we have enough intensive care beds? How many patients will need ventilators? Are there enough nurses?

Still, a model will only ever be as good as the data it's based on, and during a pandemic "it is essentially impossible to obtain real data," says Aleman, whose work has included building a simulation model of a hypothetical pandemic to explore how factors including transmission rates affect health-care demands.

"Real data wasn't really available for H1N1, which is just 10 years ago, and it's not really available now," says Aleman, noting many holes in the COVID-19 statistics available for epidemiological study.

"A lot of it is just the date that the person became COVID-19 positive, how they contracted the disease, their age and their gender. But a lot of other information like comorbidities, such as (whether they had) asthma or diabetes, did they ultimately need hospitalization? Ventilators? A lot of that information is not really publicly available in the data sets.... It's not just that it's been stripped out, but it literally says: 'Not recorded' or 'Unknown,' which means that this information is just not known by the public health agencies."

Even with good data, models pretty much just summarize what we know at a given point in time, says Tuite. As more information is gathered, their findings will change.

Tuite cautions people against leaning on projections as fact. She also says people shouldn't discount them when their predictions don't pan out.

"The information that we get next week may change those projections. And that's OK," says Tuite.

"You've seen that in the U.K. where they're refining their estimates of expected mortality. They're not refining them because their models were wrong per se. They're refining them because they have additional information.... The ability to correct yourself or to adjust those estimates is, to me, the sign of good science."

This report by The Canadian Press was first published April 4, 2020.