Fake photos, fake videos and now, fake stories.

The scope of high-tech deception keeps growing, and experts say deepfakes could become the biggest threat to truth.

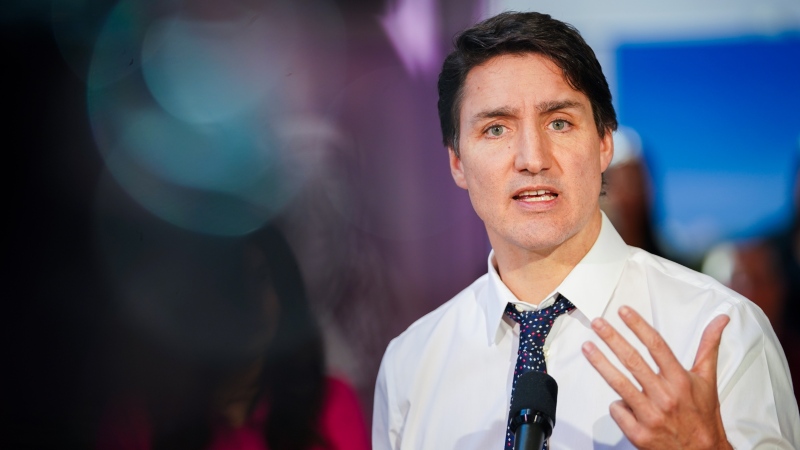

A deepfake is a video of something that never happened, generated by an AI algorithm (a neural network). It is made either by swapping one person's face for another's or by mapping someone's motions and voice onto another person.

"It's a video that has been created by essentially feeding in a computer algorithm lots of images of a person," said Andy Grotto, a national security expert and professor at Stanford University.

"It could be video, it could even be audio, and you feed it enough of that content and over time the algorithm learns how to mimic that content."

The technology that powers deepfakes keeps growing more sophisticated. Early deepfake videos looked obviously doctored, but last year BuzzFeed produced a frighteningly realistic example by having actor and director Jordan Peele deliver a PSA about deepfakes as former U.S. President Barack Obama.


"We're entering an era in which our enemies can make it look like anyone is saying anything at any point in time," Peele, as Obama, said. "Even if they would never say those things."

"This is a dangerous time. Moving forward we need to be more vigilant with what we trust from the internet."


It doesn't end with faked videos, however.

A website surfaced recently that uses an algorithm to create photographs of people who don't exist.

ThisPersonDoesNotExist.com, created by an Uber software engineer, uses an AI trained on photos of real people to generate new, realistic-looking human faces. It isn't perfect either, but as with deepfake videos, it will only get better over time.

The latest development comes from San Francisco's OpenAI.

OpenAI created an algorithm, called GPT-2, that can produce written content that reads as though a human wrote it.

News website Axios fed some of its own content into the algorithm to see what it could produce, and what it came up with was remarkably believable and totally fabricated.

OpenAI has said it won't release the full version of its text-generating AI to the public because it could be abused to create fake news.

With a Canadian federal election in 2019 and a U.S. presidential election in 2020, officials are scrambling to counter this developing technology. The Canadian government has announced measures to fight this kind of interference ahead of this fall's election.