Scientists have created a new algorithm that captures human-like learning abilities. The algorithm enables computers to recognize and draw simple handwritten characters after exposure to just a few examples.

Their work, summarized in an article published Thursday in the journal Science, represents a major advancement in the field of machine learning.

The new algorithm, called “Bayesian Program Learning,” attempts to mimic the way humans learn new concepts. When humans encounter a new concept, such as a new symbol or object, they can often recognize and understand it after seeing it just a few times.

During a teleconference, Ruslan Salakhutdinov, an assistant professor of computer science at U of T and a co-author of the article, gave the example of thumbs-up or high-five gestures, which humans can recognize and perform as needed after only limited exposure.

Machines and programs can now also learn to recognize symbols and patterns, for example in object or speech recognition, but they often depend on tens or hundreds of examples in order to perform accurately.

But the new algorithm can recognize and learn a new concept from only a limited number of examples, the authors of the study say. The algorithm can also perform more creative tasks that go beyond simple recognition, including generating new concepts, breaking concepts down into their parts, and understanding how those parts relate to one another.

Salakhutdinov said the algorithm is a significant advancement in the field of artificial intelligence.

"It has been very difficult to build machines that require as little data as humans when learning a new concept," he said in a statement. "Replicating these abilities is an exciting area of research connecting machine learning, statistics, computer vision, and cognitive science."

Recognizing and reproducing handwritten characters

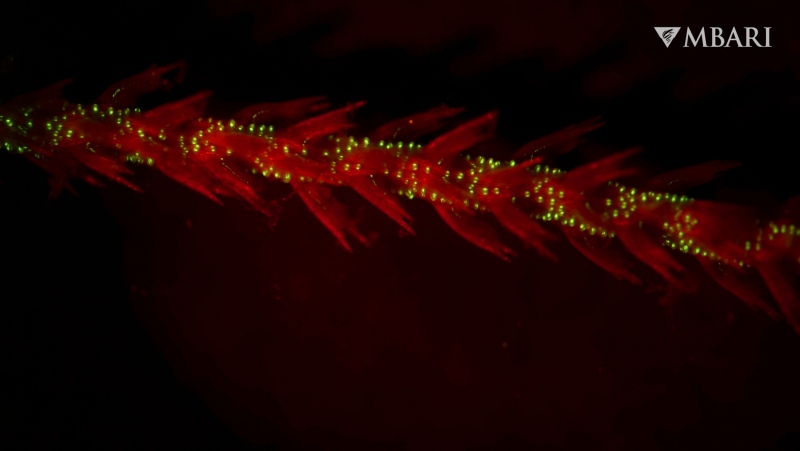

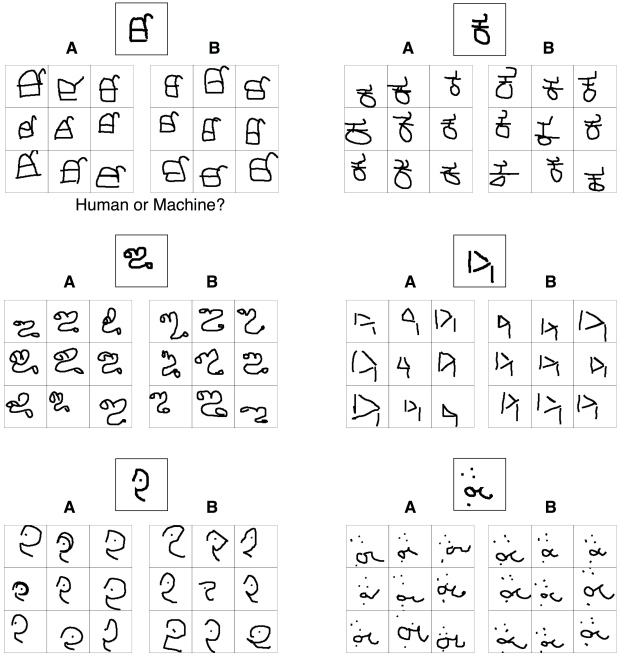

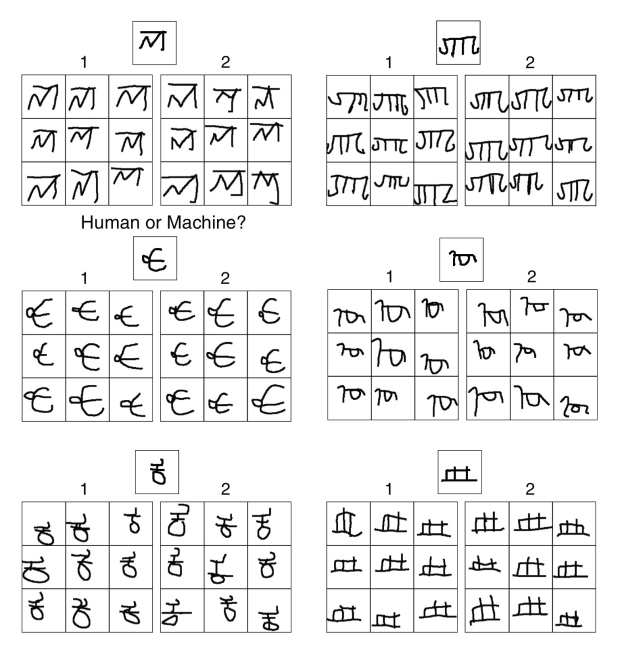

Using the algorithm, a computer was able to copy a handwritten character after being shown a single example. The characters were drawn from a range of alphabets from around the world.

The computer was also able to mimic the way humans draw characters, by using similar pen strokes, stroke order and direction, the researchers said.

Finally, when shown a set of characters and asked to produce another one that belonged in the set, the computer was able to generate an entirely new character.

When the researchers compared the computer-generated results with characters produced by humans, they found them to be "mostly indistinguishable."

Can you tell the difference between humans and machines? Humans and machines were given an image of a novel character (top) and asked to produce new exemplars. The nine-character grids in each pair that were generated by a machine are (by row) B, A; A, B; A, B. (Photo courtesy Brenden Lake)

Can you tell the difference between humans and machines? Humans and machines were given an image of a novel character (top) and asked to produce new exemplars. The nine-character grids in each pair that were generated by a machine are (by row) 1, 2; 2, 1; 1, 1. (Photo courtesy Brenden Lake)

Brenden Lake, the study's lead author and a Moore-Sloan Data Science Fellow at New York University, said the algorithm can lead to the development of better learning machines.

"Our results show that by reverse engineering how people think about a problem, we can develop better algorithms," he said in a statement. "Moreover, this work points to promising methods to narrow the gap for other machine learning tasks."

Joshua Tenenbaum, a co-author of the article and a professor at MIT in the Department of Brain and Cognitive Sciences and the Center for Brains, Minds and Machines, echoed the sentiment.

"I've wanted to build models of these remarkable abilities since my own doctoral work in the late nineties," he said in a statement. "We are still far from building machines as smart as a human child, but this is the first time we have had a machine able to learn and use a large class of real-world concepts – even simple visual concepts such as handwritten characters – in ways that are hard to tell apart from humans."

'Bayesian Program Learning'

The algorithm works by representing concepts as simple computer programs.

For example, the letter "A" is represented by computer code, which will generate examples of that letter when the code is run, NYU said in a statement released Thursday.
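To make the idea concrete, here is a minimal Python sketch, written by hand, of what a program for the letter "A" might look like. Everything in it is an assumption for illustration: the function names (`draw_letter_a`, `jitter`, `lerp`), the unit-canvas coordinates, the three straight strokes and the noise levels are hypothetical and are not taken from the study, whose model learns its stroke primitives and relations from data. The sketch does show three ingredients the article describes: parts (three strokes drawn in a fixed order and direction), a relation between parts (the crossbar's endpoints sit partway along the two legs) and random variation, so each run yields a slightly different "A".

```python
import random

# Hypothetical stroke-level program for the letter "A" (illustration only,
# not code from the study). A point is an (x, y) tuple on a unit canvas.
Point = tuple[float, float]
Stroke = list[Point]


def jitter(p: Point, rng: random.Random, sd: float) -> Point:
    """Perturb a point with Gaussian noise to mimic natural hand variation."""
    return (p[0] + rng.gauss(0.0, sd), p[1] + rng.gauss(0.0, sd))


def lerp(a: Point, b: Point, t: float) -> Point:
    """Return the point a fraction t of the way from a to b."""
    return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))


def draw_letter_a(rng: random.Random, sd: float = 0.03) -> list[Stroke]:
    """Run the hypothetical 'A' program once; return strokes in drawing order."""
    apex = jitter((0.5, 1.0), rng, sd)
    left_foot = jitter((0.1, 0.0), rng, sd)
    right_foot = jitter((0.9, 0.0), rng, sd)
    # Parts 1 and 2: the two legs, each drawn from the apex downward.
    left_leg = [apex, left_foot]
    right_leg = [apex, right_foot]
    # Part 3: the crossbar. Its relation to the other parts is that its
    # endpoints lie partway along each leg, at a slightly random height.
    t = min(max(rng.gauss(0.55, 0.05), 0.3), 0.8)
    crossbar = [lerp(apex, left_foot, t), lerp(apex, right_foot, t)]
    return [left_leg, right_leg, crossbar]


rng = random.Random(0)
first = draw_letter_a(rng)
second = draw_letter_a(rng)
# Same program, two runs: two slightly different versions of "A".
different = first != second
```

Seeding `random.Random` keeps the variation reproducible, which is convenient for testing, while still letting every call produce a new example.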

However, no human programmer is required during the learning process; rather, the algorithm programs itself by building code to produce the letter it sees. And unlike standard computer programs, which produce the same output every time, these special programs produce a different output each time they’re run, the statement said.

This captures the variation among instances of a concept, such as the natural differences between how two people might draw the letter "A," the statement added.
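The idea that the algorithm "programs itself" can be illustrated, very loosely, with Bayes' rule, the principle behind the "Bayesian" in the algorithm's name. The sketch below assumes a drastically simplified setting: instead of building a program from scratch, it chooses among three hand-written stroke templates (`CANDIDATES`) using a hypothetical prior (`PRIOR`) and an assumed Gaussian model of how a real hand deviates from a template. All names and numbers are assumptions for illustration; the published model searches a far larger space of possible programs.

```python
import math

# Hypothetical candidate "programs": each is a list of straight strokes,
# and each stroke is a (start, end) pair of (x, y) points on a unit canvas.
CANDIDATES = {
    "A": [((0.5, 1.0), (0.1, 0.0)), ((0.5, 1.0), (0.9, 0.0)), ((0.3, 0.45), (0.7, 0.45))],
    "H": [((0.1, 1.0), (0.1, 0.0)), ((0.9, 1.0), (0.9, 0.0)), ((0.1, 0.5), (0.9, 0.5))],
    "V": [((0.1, 1.0), (0.5, 0.0)), ((0.5, 0.0), (0.9, 1.0))],
}

# Hypothetical prior belief in each program before any drawing is seen.
PRIOR = {"A": 0.4, "H": 0.3, "V": 0.3}


def log_likelihood(observed, template, sd=0.05):
    """Log-probability of the observed stroke endpoints under a template,
    assuming independent Gaussian noise (std. dev. sd) on every coordinate.
    A template with a different number of strokes gets probability zero."""
    if len(observed) != len(template):
        return -math.inf
    total = 0.0
    for obs_stroke, tmpl_stroke in zip(observed, template):
        for (ox, oy), (tx, ty) in zip(obs_stroke, tmpl_stroke):
            for d in (ox - tx, oy - ty):
                total += -0.5 * (d / sd) ** 2 - math.log(sd * math.sqrt(2 * math.pi))
    return total


def posterior(observed, candidates=CANDIDATES, prior=PRIOR):
    """Bayes' rule: the posterior is proportional to prior times likelihood."""
    log_scores = {
        name: math.log(prior[name]) + log_likelihood(observed, tmpl)
        for name, tmpl in candidates.items()
    }
    best = max(log_scores.values())
    if best == -math.inf:
        raise ValueError("no candidate program can explain this drawing")
    # Subtract the best score before exponentiating to avoid underflow.
    weights = {name: math.exp(s - best) for name, s in log_scores.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# A single wobbly, hand-drawn "A": three strokes in the template's order.
observed = [
    ((0.52, 0.97), (0.12, 0.03)),
    ((0.49, 1.02), (0.88, 0.01)),
    ((0.31, 0.47), (0.68, 0.44)),
]
post = posterior(observed)
best_guess = max(post, key=post.get)
```

Because this drawing sits close to the "A" template, one example is enough to make "A" overwhelmingly probable; the prior would matter more for an ambiguous drawing that fit two templates about equally well. Requiring an exact stroke count is a simplification a real system would need to relax.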

In a video explaining his work, Lake said that while the algorithm currently works only for handwritten characters, it could eventually be used in other domains.

"The key point is that we need to learn the right form of representation, not just learning from bigger data sets, in order to build more powerful and more human-like learning patterns," he said.